Meta is rolling out new safety measures for teens across its platforms, extending the protections previously launched on Instagram to both Facebook and Messenger. The move comes as part of the company’s broader effort to make its apps more age-appropriate and offer parents additional tools to oversee how their kids engage with social media.

The latest update brings Instagram’s Teen Account protections, originally introduced in September 2024, to other parts of Meta’s ecosystem. These accounts are tailored for users under 16, automatically setting their profiles to private and filtering out harmful or unwanted content through features like the Hidden Words tool, which blocks problematic comments and DM requests.

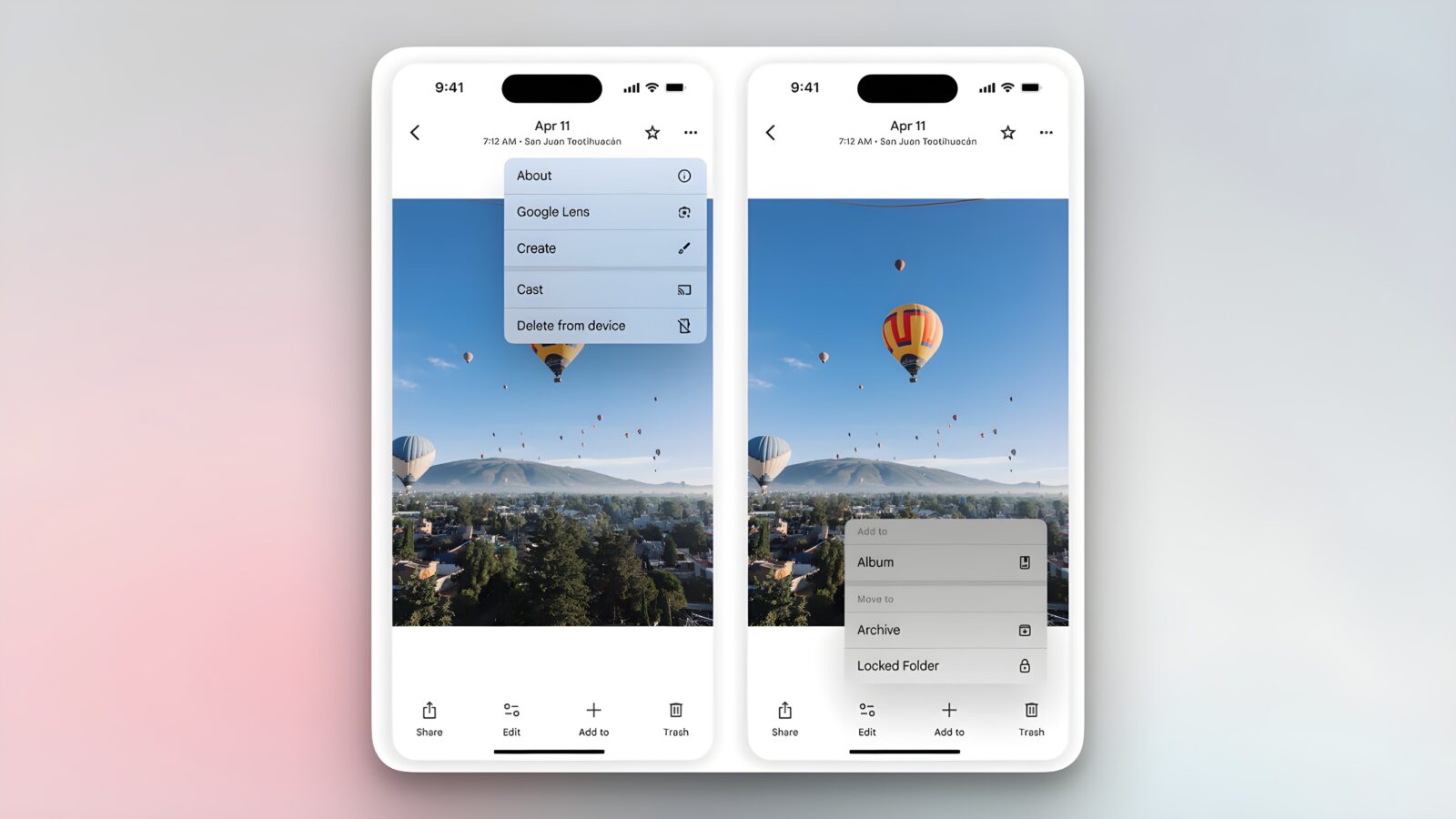

One of the biggest changes is a set of restrictions on live streaming and content visibility. Without parental consent, teens will no longer be able to go live or to turn off the automatic blurring of potentially sensitive images in direct messages. These restrictions are designed to reduce the chances of inappropriate exposure and limit risky interactions, especially in private communications.

According to Meta, more than 54 million Instagram users have already been moved into Teen Accounts. The company said that 97% of teens aged 13 to 15 have kept the default safety settings, which suggests that most teens leave the protections in place. While these numbers sound promising, Meta did not explain how the figures were collected or which countries they cover.

Parents appear to be generally supportive. In a company-commissioned survey conducted by Ipsos, 94% of surveyed parents said the protections were useful, and 85% felt they helped teens have better experiences on the platform. However, the exact sample size and demographics were not disclosed.

The rollout aligns with increasing pressure on social media companies to provide stronger parental controls and age-specific safety features. Children’s safety advocates have long pushed for more robust safeguards, especially as platforms face growing scrutiny over their impact on mental health and privacy. Other companies are following suit, with TikTok recently updating its own parental control settings.

As digital safety becomes a priority for regulators and families alike, more social platforms may need to rethink how young users interact online and whether age-appropriate experiences should be the standard, not the exception.