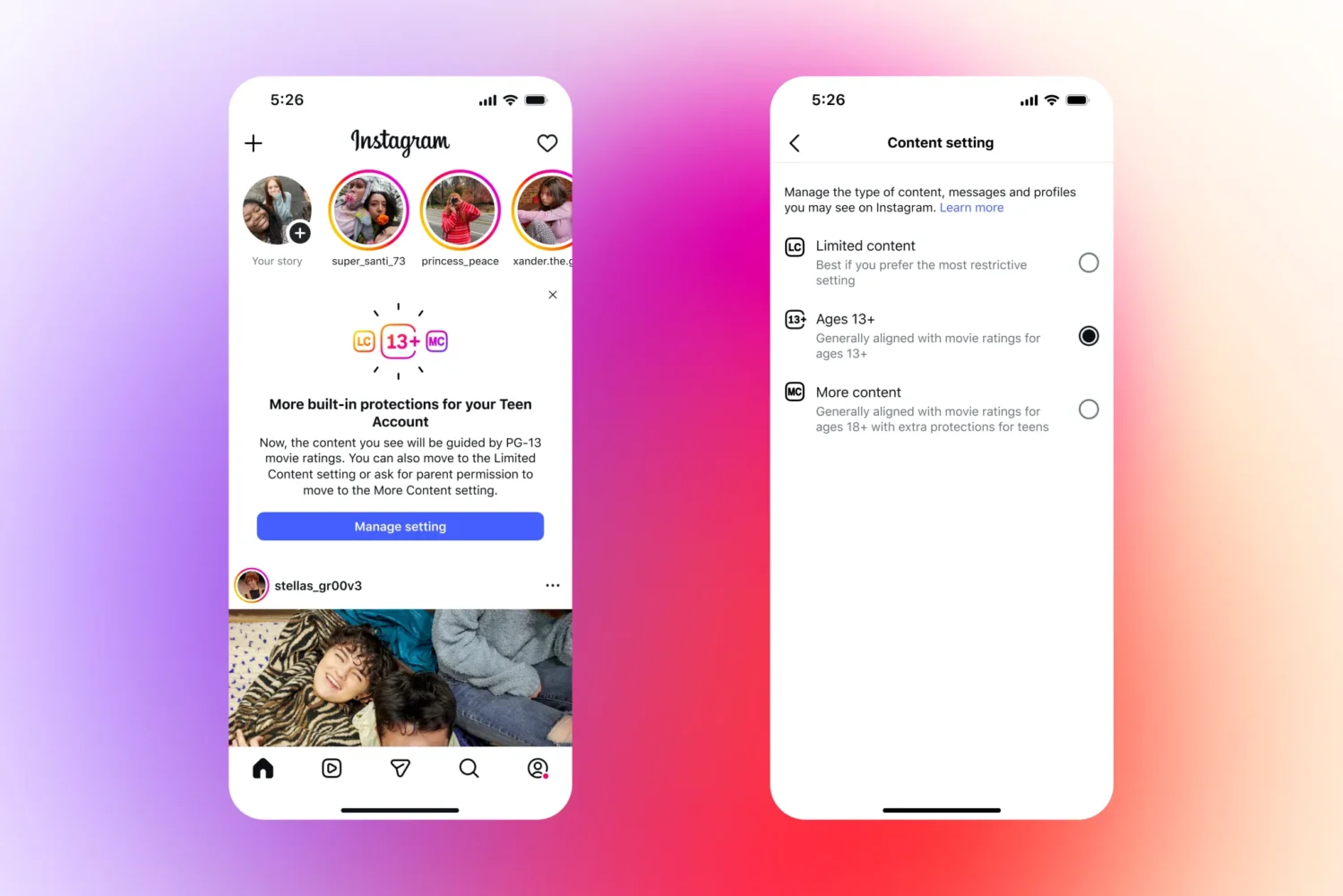

Instagram is tightening its safety measures for younger users by introducing new PG-13 content restrictions and expanded parental controls. Beginning this week, all users under 18 will, by default, be limited to viewing posts that align with PG-13 movie standards—excluding material depicting explicit violence, sexual content, or graphic drug use. Teens won’t be able to change this setting without verified approval from a parent or guardian.

The new policy marks one of Instagram’s most comprehensive efforts yet to regulate what minors can access on the platform. It builds on Meta’s broader teen safety framework, which already includes limits on direct messages, search results, and recommendations tied to sensitive topics such as eating disorders and self-harm.

In addition to the PG-13 filter, Instagram is introducing a stricter “Limited Content” setting that prevents teens from viewing or posting comments on certain posts flagged by the platform. This filter will also extend to AI-powered interactions. Starting in 2026, AI chatbot conversations for teens who have the Limited Content setting enabled will be further restricted, continuing Meta’s recent focus on tightening AI safety for minors.

The rollout comes amid growing scrutiny of AI chat platforms. Both OpenAI and Character.AI have faced lawsuits alleging harm to users, and each company recently implemented new safeguards, such as prohibiting flirtatious interactions and adding parental control options. Instagram’s decision to apply similar moderation principles reflects a wider industry move toward stricter digital welfare standards for under-18 users.

The platform is also updating how it handles inappropriate accounts and content visibility. Teen users will be blocked from following or engaging with profiles that share adult or otherwise unsuitable material. If they already follow such accounts, Instagram will hide that content from their feeds and disable interactions. These accounts will also be excluded from recommendations and search results.

To strengthen parental oversight, Instagram is testing new supervision tools that allow parents to flag specific posts or accounts they believe should not be recommended to teens. These reports will be reviewed by Instagram’s moderation team. The platform has also refined its language filters, now blocking keywords such as “alcohol” and “gore,” and accounting for deliberate misspellings used to evade detection.

The updated teen protections are rolling out first in the U.S., U.K., Australia, and Canada, with a global launch planned for 2026.