If your ChatGPT account has recently started refusing certain requests or prompting you to verify your age, the change is likely tied to a broader update now rolling out across consumer plans. OpenAI has begun estimating users' ages from account behavior to determine whether an account likely belongs to someone under 18 and, if so, to apply a different set of safeguards.

According to OpenAI, this age prediction system is designed to support a clearer separation between adult and teen experiences on ChatGPT. The company says it wants to offer younger users a version of the service that reduces exposure to sensitive material, while letting adults use the platform with fewer restrictions, within its established safety limits.

Age prediction builds on controls that already exist. Users who declare themselves under 18 during signup automatically receive additional restrictions. The new system attempts to identify cases where the declared age may not match how an account behaves over time. To do this, the model evaluates a mix of behavioral and account-level signals, including how long the account has existed, the times of day it is typically used, long-term usage patterns, and the age the user provided. OpenAI describes the rollout as iterative: rather than treating the model as finished, it plans to use real-world signals to refine its accuracy over time.

When the system estimates that an account may belong to a minor, ChatGPT defaults to a more restrictive experience. These safeguards are intended to limit access to content such as graphic violence, sexual or romantic roleplay, depictions of self-harm, viral challenges that could encourage risky behavior, and material that promotes extreme beauty standards or unhealthy dieting. OpenAI says this approach is informed by research into adolescent development, including differences in impulse control, risk perception, and emotional regulation.

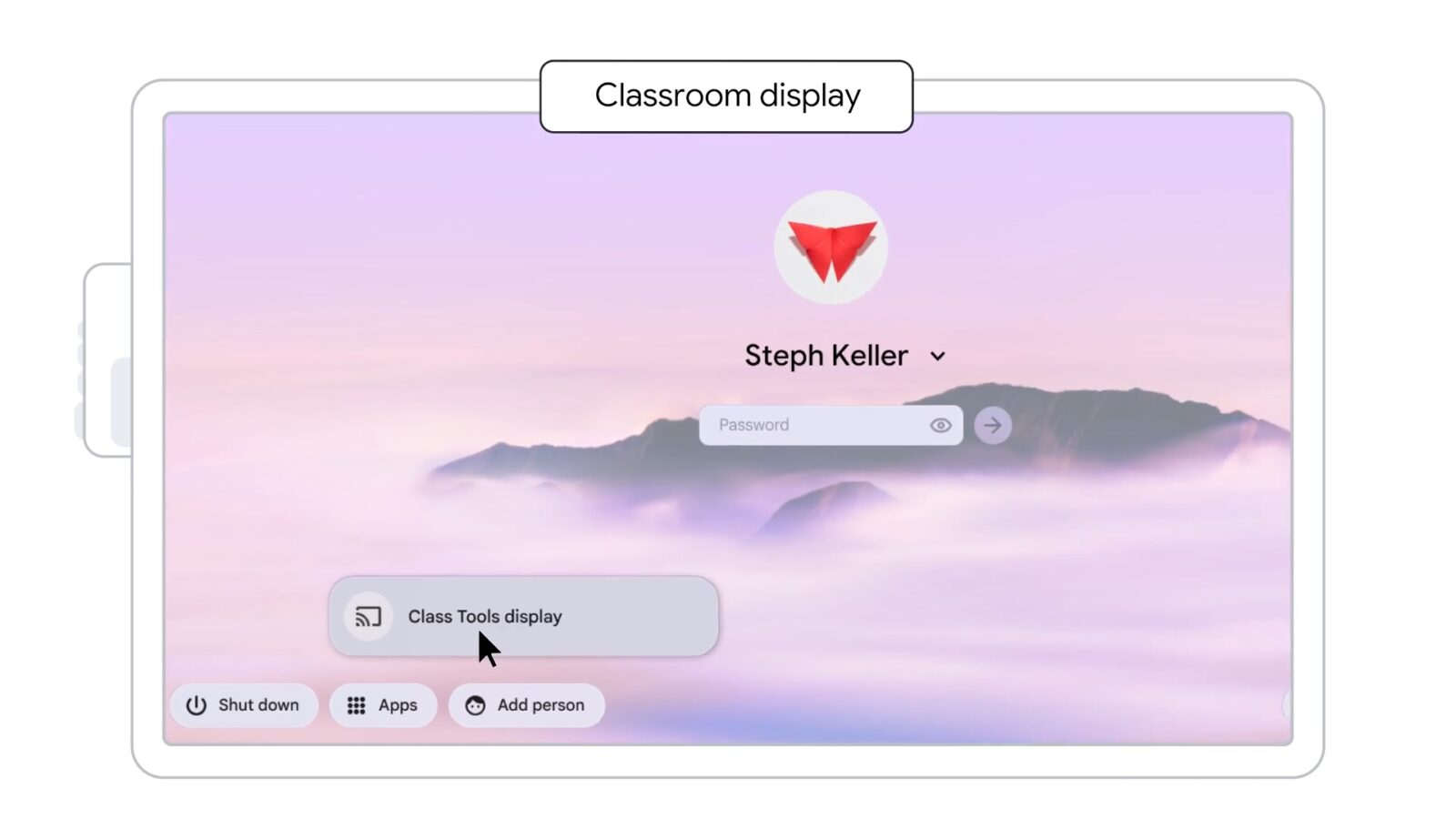

Users who believe their account has been incorrectly classified are offered a way to restore full access. Verification is handled through Persona, which lets users confirm their age with a selfie and, in some cases, a government-issued ID. OpenAI describes this as a fast and simple process, though some users remain uncomfortable providing personal data to a third-party service, even one that encrypts information and limits data retention. Users can start this process at any time from the settings menu.

In addition to automated safeguards, OpenAI is also expanding optional parental controls for teen accounts. These include features such as setting quiet hours when ChatGPT cannot be used, managing whether interactions contribute to model training, and receiving notifications if the system detects signs of acute distress. The company says that when age signals are unclear or incomplete, it intentionally defaults to the safer, more restricted experience.

The rollout is ongoing, with regional considerations slowing deployment in some areas, including parts of the EU. OpenAI says it will continue refining the system and sharing updates as it learns from early results, working alongside external groups such as the American Psychological Association and other safety-focused organizations.

For users, the practical takeaway is straightforward. If ChatGPT suddenly feels more limited, the cause is likely automated age estimation rather than a policy violation. While verification remains the only reliable way to lift these restrictions, the broader shift signals a future where access to AI tools is increasingly shaped by inferred identity and risk profiles, not just by what users say about themselves.