Google has begun rolling out a major Gemini-powered update to Google Maps, introducing four new AI features designed to make navigation and local discovery more natural and conversational. While earlier leaks hinted at full-fledged chat-like interactions within Maps, the actual release focuses on practical tools that integrate Gemini’s conversational intelligence directly into real-world navigation and search.

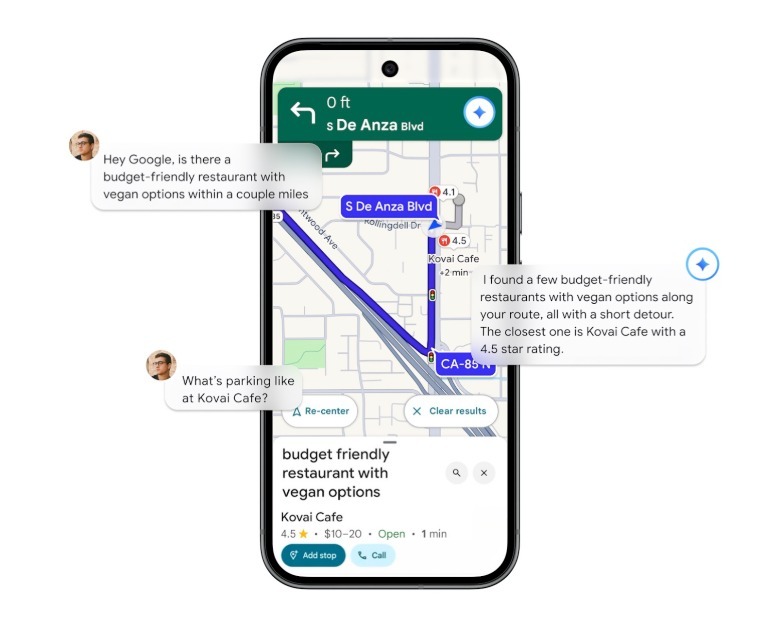

The first feature is voice-based, hands-free communication with Gemini while driving. Instead of just receiving directions, users can now ask context-aware questions through natural speech, such as finding a nearby restaurant, checking parking availability, or adding a stop along the route, and Maps will respond in real time. This conversational layer turns Gemini into a digital copilot that can adjust the route, find stops, and even add events to Google Calendar, all without the driver taking their hands off the wheel. The feature will arrive for Android and iOS users in the coming weeks.

The second upgrade changes how directions are delivered. Instead of relying solely on distance-based prompts like “turn right in 50 feet,” Gemini will use recognizable landmarks for clearer guidance. Directions might now sound like, “turn right after the Thai Siam Restaurant” or “continue past the red church.” These references will also appear visually on-screen, reducing confusion in dense urban areas. The landmark-based guidance is launching first in the United States.

A third Gemini feature focuses on proactive traffic alerts. Even when you’re not actively navigating, Maps can now notify you of traffic disruptions or delays on familiar routes, using AI to predict and report issues before you encounter them. This aims to make the app more useful for daily commuters who keep it running in the background.

Finally, Google has expanded Gemini’s visual search through Google Lens integration. While in Maps, users can tap the camera icon in the search bar, point their phone’s camera at a location, and ask questions by voice, such as why a restaurant is popular or what dishes it’s known for. Gemini will then provide contextual information on-screen, offering a more immersive way to explore unfamiliar areas. This feature is expected to roll out across Android and iOS devices in the U.S. later this month.

Collectively, these Gemini updates reflect Google’s effort to make Maps more conversational, predictive, and visually intelligent—bridging the gap between traditional navigation and real-time AI assistance.