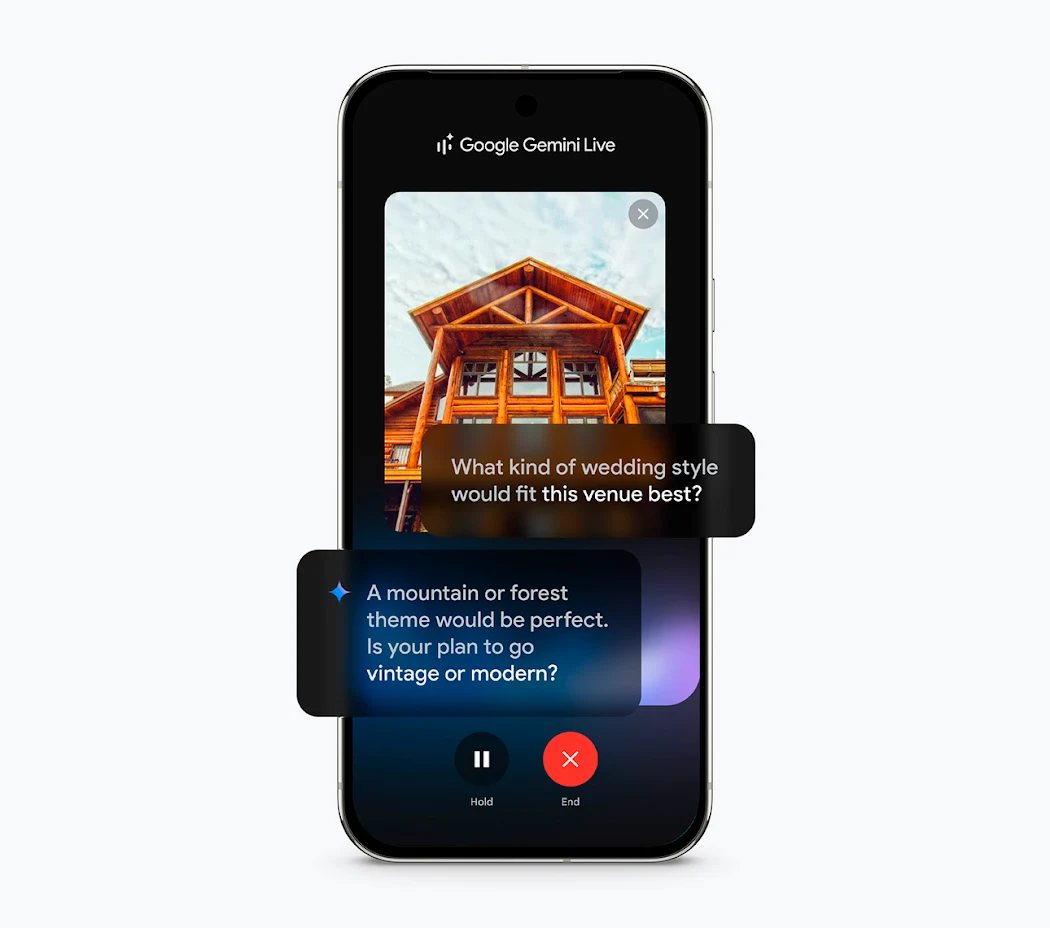

Google is pushing its Gemini Live AI deeper into everyday smartphone use, expanding beyond demonstrations of conversational abilities into more practical mobile integration. Announced alongside the Pixel 10, the update brings Gemini Live into Android’s core productivity and communication apps, positioning it as a true voice-first assistant rather than just a novelty.

Until now, Gemini Live has been framed as an AI you can talk to casually, one that can also “see” the world through your phone’s camera and interpret it. That showcase element grabbed headlines, but its usefulness was limited without real app-level integration. With this update, Gemini Live can interact directly with Calendar, Keep, and Tasks. Instead of opening an app, tapping through menus, or typing, users can simply say things like “Add a note about picking up cabbage from the store” or “Schedule a meeting with John at 4 p.m. Saturday,” and the AI will carry out the action in the background.

The approach mirrors how Gemini has already been embedded into Google’s larger ecosystem across Docs, Gmail, and Search. Extending that same connectivity to mobile apps means Android users may soon rely less on manual inputs and more on natural conversation. The expansion also highlights Google’s ongoing strategy of positioning Gemini as a unifying layer across its services, rather than just a standalone chatbot.

Looking ahead, Google plans to extend Gemini Live’s functionality to Messages, Phone, and Clock, while also deepening integration with Google Maps. These moves signal a shift toward treating Gemini Live as a central voice-based interface for the operating system itself. For users, that could mean drafting texts, setting alarms, and navigating routes entirely by conversation.

Google has also hinted at technical improvements behind the scenes, including updated models designed to make conversations flow more naturally. One example is a new setting that lets users adjust Gemini Live’s speaking pace to match their note-taking speed, a small but practical feature for those who use AI in real-time contexts.

What remains to be seen is how seamlessly these integrations work in daily use, and whether Gemini Live can handle the inconsistencies of real-world communication without frustrating users. Voice-first interactions have long been promised by tech companies, but adoption often stalls when assistants fail to understand context or require repeated corrections. If Gemini Live can avoid those pitfalls, it could mark a meaningful step toward AI becoming a default interface on mobile devices.