Apple is drawing attention to how developers are adopting its Foundation Models framework to integrate artificial intelligence into everyday apps, following the release of iOS 26, iPadOS 26, and macOS Tahoe 26. The framework gives developers access to an on-device large language model, the core of Apple’s broader Apple Intelligence system.

Unlike the models behind many cloud-based AI platforms, this one runs directly on the user’s device. That means new features can work offline, offer tighter privacy protections, and reduce costs for developers by removing the need for external inference servers. These benefits are beginning to surface in apps spanning fitness, education, mental health, and productivity.

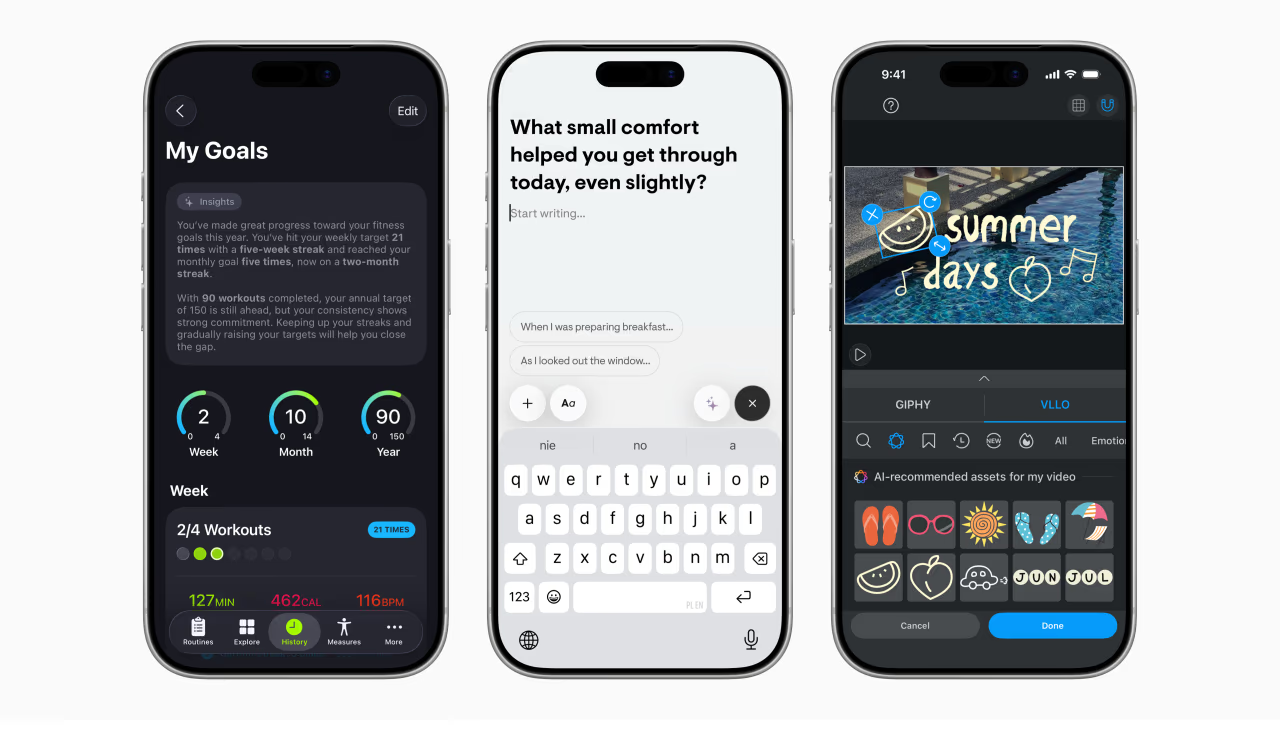

Examples include SmartGym, which now generates custom workout plans from plain-text requests and offers feedback designed to feel like coaching. The journaling app Stoic uses the framework to craft writing prompts linked to a user’s previous entries, keeping all data on the device rather than in the cloud. In the classroom, CellWalk adapts scientific explanations to different knowledge levels, presenting biology concepts in more approachable language. The productivity-focused app Stuff converts voice or handwriting input into structured tasks, with dates and tags filled in automatically. Meanwhile, the video editor VLLO combines the Foundation Models framework with Apple’s Vision tools to analyze footage and propose background music or visual elements.

Apple has integrated the framework into Swift, its primary development language, giving app creators a more direct path to building AI-driven tools. The on-device model has roughly three billion parameters and runs on any device that supports Apple Intelligence, provided it is running iOS 26, iPadOS 26, or macOS Tahoe 26.
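To give a sense of what that Swift integration can look like, here is a minimal sketch of a plain-text workout request turned into structured output. It relies on the framework's `SystemLanguageModel`, `LanguageModelSession`, and `@Generable` guided generation. The `WorkoutPlan` type, the `makeWorkoutPlan(from:)` helper, and the instruction string are hypothetical names chosen for illustration, and nothing here reflects how SmartGym or any other app mentioned above is actually built.

```swift
import FoundationModels

// Hypothetical output type for illustration only; not taken from any real app.
// @Generable lets the framework ask the model to fill in this structure directly.
@Generable
struct WorkoutPlan {
    @Guide(description: "A short title for the plan")
    var title: String

    @Guide(description: "Exercises in the order they should be performed")
    var exercises: [String]
}

// Hypothetical helper: returns nil when the on-device model cannot be used.
func makeWorkoutPlan(from request: String) async throws -> WorkoutPlan? {
    // The model is only usable on devices where Apple Intelligence is
    // supported, enabled, and ready, so check availability first.
    guard case .available = SystemLanguageModel.default.availability else {
        return nil
    }

    // Instructions set the overall behavior for every prompt in this session.
    let session = LanguageModelSession(
        instructions: "You are a fitness assistant. Produce concise workout plans."
    )

    // Guided generation: the response content is a WorkoutPlan value,
    // not free-form text that the app would have to parse.
    let response = try await session.respond(to: request, generating: WorkoutPlan.self)
    return response.content
}
```

A caller would invoke this from an asynchronous context, for example `try await makeWorkoutPlan(from: "30-minute bodyweight routine")`, and fall back to a non-AI path when the result is `nil`. Because inference happens on the device, the request text never has to leave it.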

By keeping the model local, Apple is positioning its AI approach as both cost-efficient for developers and more private for users. What remains to be seen is how widely developers will adopt the framework and whether the on-device model is powerful enough to keep up with the rapid pace of AI development elsewhere, particularly from cloud-first competitors.