Google is expanding its use of AI to improve digital accessibility across Android and Chrome, with a particular focus on users with vision and hearing impairments. The company’s Gemini AI, which started as a tool to describe images within Android’s TalkBack system, is now being developed into a more conversational assistant for navigating visual content and web pages.

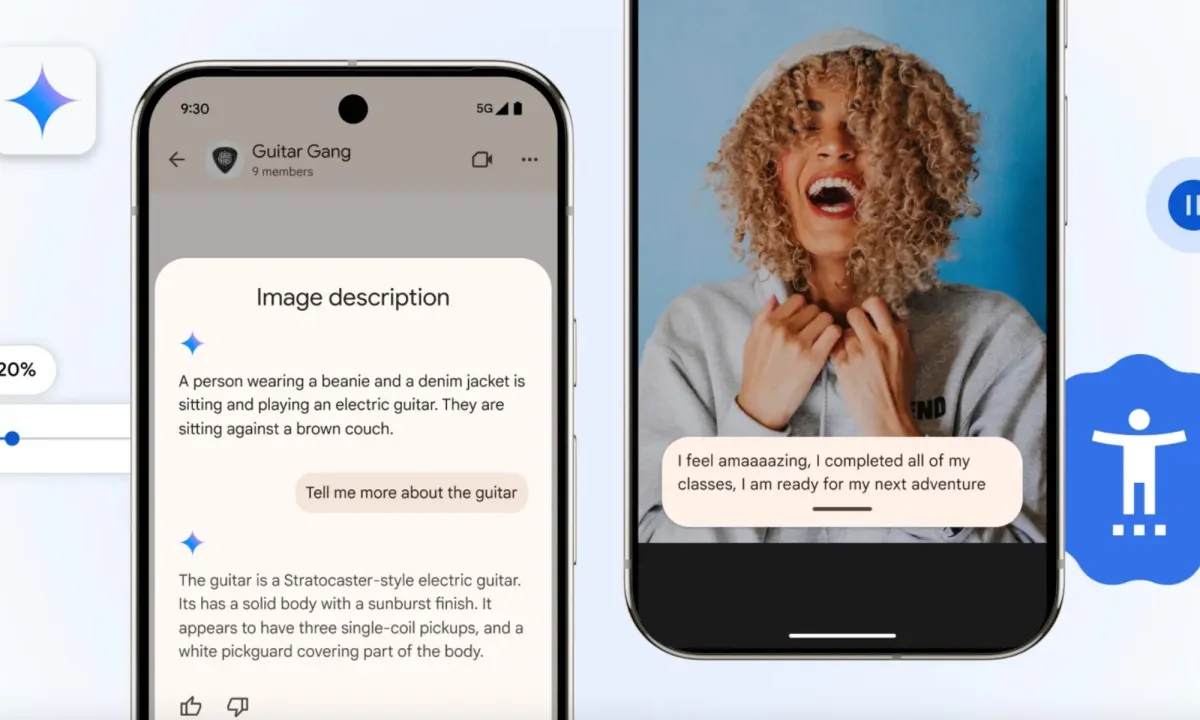

Gemini's integration with TalkBack, introduced in 2024, brought more descriptive context to images, enhancing TalkBack’s basic screen-reading capabilities. With the latest update, users can now ask follow-up questions about images they encounter on their device. This adds a conversational layer to accessibility, making it easier for people to understand not just what’s on the screen, but also the context surrounding it. For instance, someone receiving a photo of a guitar can now ask about its make and color, or ask what other items are in the frame, all through voice interaction.

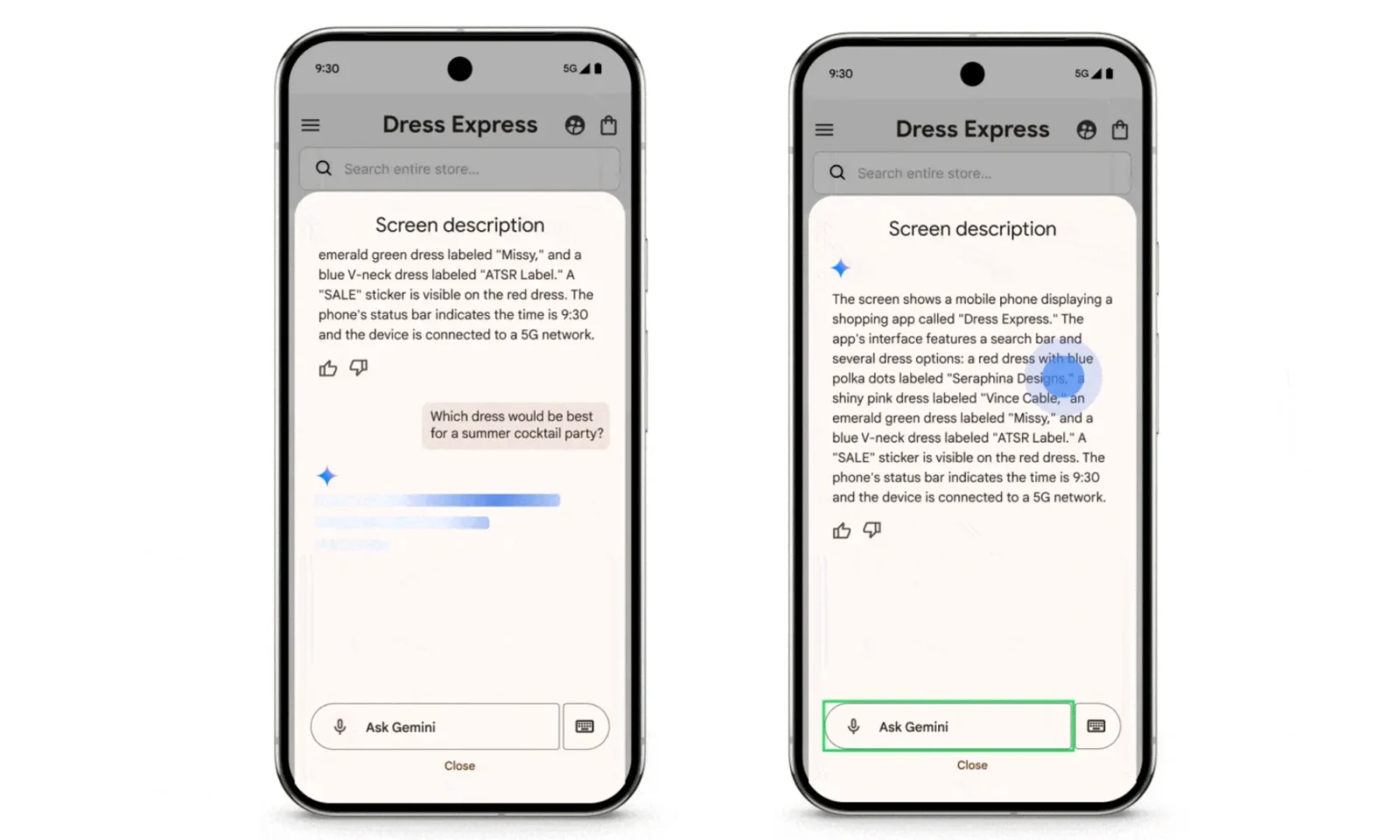

A new “Describe Screen” option within the TalkBack menu enables this functionality more broadly. When browsing image-heavy content like online catalogs, Gemini can now describe what’s visible and respond to queries such as which outfit might be best suited for cold weather, or whether a product on the screen is discounted. It also supports screen-wide analysis, helping users navigate complex pages that would otherwise be visually overwhelming.

In addition to vision accessibility, Google is also addressing the needs of users who are deaf or hard of hearing. Chrome on Android now includes “Expressive Captions,” an upgrade to its auto-captioning system. Rather than only transcribing the spoken words, the captions reflect tone and emotion, turning a flat “goal” into a drawn-out “goooaaal” when appropriate. These captions also pick up background audio cues like cheering or whistles, providing a richer experience for users who rely on text to follow video content. Expressive Captions will roll out with Android 15 in select English-speaking regions, including the US, UK, Canada, and Australia.

Another usability-focused improvement is adaptive text zoom in Chrome. While Android already offers page zoom, increasing text size has traditionally caused layout issues. The updated system adapts the page layout as text grows, preserving readability without disrupting the overall structure. Users can fine-tune zoom levels using a new slider and choose whether to apply settings universally or on a per-site basis.

Together, these features represent a practical shift toward making Android and Chrome more inclusive. Rather than layering on flashy AI tools, Google appears to be focusing on how artificial intelligence can address real usability gaps—particularly for users navigating digital spaces with sensory limitations.